Half an Hour,

Apr 04, 2015

Patrick Dunleavy offers this list of ten typical questions that might be asked on your PhD oral exam. I always felt I would have aced my oral exam, but I never got to take it because my examiners did not want me to work on network theory.

So how would I have answered these questions? Dunleavy's post begs a response...

1. What are the most original (or value-added) parts of your thesis?

The semantics of distributed cognition.

In distributed cognition, there is no single location in memory where we might find an idea or concept. Rather, it is distributed across a set of connections between entities (a graph theorist might say it is constituted of a set of edges between nodes).

Like this:

We can say a few things about these distributed representations that are significant:

- They are not representations at all - that is, they do not 'stand for' things or 'signify' things. This set of connections, for example, does not stand for the concept 'couch' nor the word 'couch'.

- They are not propositional - that is, they are not encoded in the form of a sentence, and we do not 'think' in words and sentences (there is therefore no 'encoding' that takes place when we communicate or perceive the world around us).

- They are different for each person. No two activation networks are alike. Indeed, they are even different in the same person over time. There is no static constant that instantiates the concept 'couch' at all.

The best way to think of the web of connections is to think of it as being like the ripples that spread out when you throw a stone into a pond. The initial stimulus causes a cascade of interactions as water molecules bump against each other, a spreading wave of activation that follows the path of least resistance. The waves do not 'represent' anything, they are not 'about' the stone, and indeed, you cannot infer to the existence of the stone merely from the presence of the waves (although Kantian metaphysics is based on exactly that sort of inference).

To make life more complex, we only have one set of interconnected entities and connections between them, and exactly the same set of connections contains multiple concepts or ideas. So in addition to our thought of a couch (symbolized in red) we might have a thought of a dog (symbolized in green).

Like this:

Again, these activations do not stand for anything; they are simply characteristic patterns of spreading activation that occur in the presence of a stimulus. It is typical for the green activations to overlap the red activations. This means that, in certain circumstances, the activation of 'dog' might, by association, cause the activation of 'couch', depending on the overlap of these and other associated concepts.

This is the basis for inferences. As Hume would say, "the far greatest part of our reasonings with all our actions and passions, can be derived from nothing but custom and habit." Our inferences from one thing to another, from cause to effect, from premise to conclusion, are based in repeated iterations of an associative logic, which is based on the mechanics of spreading activation.

There are different ways to talk about this. One way is to describe it mathematically, through the principles of network interactions and learning theories. Each individual entity has its own activation function, which determines how likely it is to be activated by incoming stimuli; each connection has its own weight, which determines how much signal it carries forward, and the creation and destruction of connections in a network, its plasticity, is determined by the learning theory, which derives these new connections from weights and activation functions.
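The mathematical description can be made concrete with a small sketch. Everything specific here is an assumption for illustration: the node names, the weights, and the choice of a sigmoid as the activation function are invented, and no learning rule is modelled, so the connections stay fixed.

```python
import math

# Hypothetical toy network: node names and weights are invented for illustration.
WEIGHTS = {
    ("stimulus", "a"): 1.0,
    ("a", "b"): 0.8,
    ("a", "c"): 0.2,
    ("b", "d"): 0.9,
}


def activation(total_input, threshold=0.5, steepness=10.0):
    """Sigmoid activation (an assumed choice): response of a node to incoming signal."""
    return 1.0 / (1.0 + math.exp(-steepness * (total_input - threshold)))


def spread(weights, source, steps=3):
    """Propagate activation outward from `source` for a fixed number of steps."""
    levels = {source: 1.0}
    for _ in range(steps):
        incoming = {}
        # Each active node sends its level, scaled by the connection weight.
        for (src, dst), weight in weights.items():
            if src in levels:
                incoming[dst] = incoming.get(dst, 0.0) + levels[src] * weight
        # Each receiving node applies its activation function to the summed input.
        for node, total in incoming.items():
            levels[node] = max(levels.get(node, 0.0), activation(total))
    return levels


levels = spread(WEIGHTS, "stimulus")
for node, level in sorted(levels.items()):
    print(f"{node}: {level:.3f}")
```

In this toy network the strongly weighted path through `b` and `d` ends up highly active while `c` barely responds, which is the 'path of least resistance' idea in miniature.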

Another way to talk about it (and the way I talk about it in my dissertation proposal) is to talk about it conceptually, by describing the relevant similarity between one concept and another. We might think of this as the degree of overlap between one concept and the next - a very loose statement of the idea would say that 'dog' and 'couch' have a similarity ranking of '3', based on the overlap depicted in the diagram.

It is this network semantics based on similarity that is probably my most significant contribution to the field. It follows the work of people like Amos Tversky in presenting a feature-based account of similarity, but analyzes this in terms of spreading activation in neural networks.
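As a rough illustration of similarity as overlap, each concept can be treated as the set of connections that activate together. The connection sets below are invented (the diagram is not reproduced here) and are chosen only so that the shared count comes out as 3. The `tversky` function is a sketch of Tversky's ratio model with set size standing in for feature salience; it is offered as a point of comparison, not as the formulation used in the dissertation proposal.

```python
# Hypothetical activation patterns: each concept is a set of connections (edges).
couch = {("n1", "n2"), ("n2", "n3"), ("n3", "n4"), ("n4", "n5"), ("n5", "n6")}
dog = {("n2", "n3"), ("n3", "n4"), ("n4", "n5"),
       ("n6", "n7"), ("n7", "n8"), ("n8", "n9")}


def overlap(a, b):
    """Count the connections two patterns share."""
    return len(a & b)


def tversky(a, b, alpha=0.5, beta=0.5):
    """Ratio model: shared features weighed against each side's distinctive features."""
    common = len(a & b)
    denom = common + alpha * len(a - b) + beta * len(b - a)
    return common / denom if denom else 0.0


print(overlap(couch, dog))            # shared connections
print(round(tversky(couch, dog), 3))  # normalized similarity
```

With unequal `alpha` and `beta` the ratio becomes asymmetric, so 'a dog is like a couch' need not score the same as 'a couch is like a dog'.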

2. Which propositions or findings would you say are distinctively your own?

None.

If you take the theory I've just outlined above seriously, you see that it would be inconsistent for me to say that everything is connected, and then for me to say that some proposition or finding is distinctively my own.

Indeed, I struggle with the idea that my thesis is based in propositions at all. This supposes an idea of a theory or model that is composed of a set of related propositions which are either all consistently and coherently maintained by some logical framework or all derived from a set of observations or measurements on the basis of some sort of inference calculus. And I doubt that either is the case.

At best what I offer is a perspective, a point of view, a set of outcomes as presented from the perspective of this entity given the experiences and observations obtained over a lifetime. But these are all influenced to a significant degree by interactions and communications with others.

My understanding of the word 'Paris', for example, carries with it the influence of every other occurrence of the word 'Paris' I have experienced in my lifetime. It can 'mean' nothing else. Indeed, if I were to say that the meaning of the word 'Paris' were uniquely my own, there would be significant cause for concern, for either I would be asserting some sort of supernatural connection to Truth and Objects, or I would be asserting some sort of egotistical priority of my own perspective above that of all others.

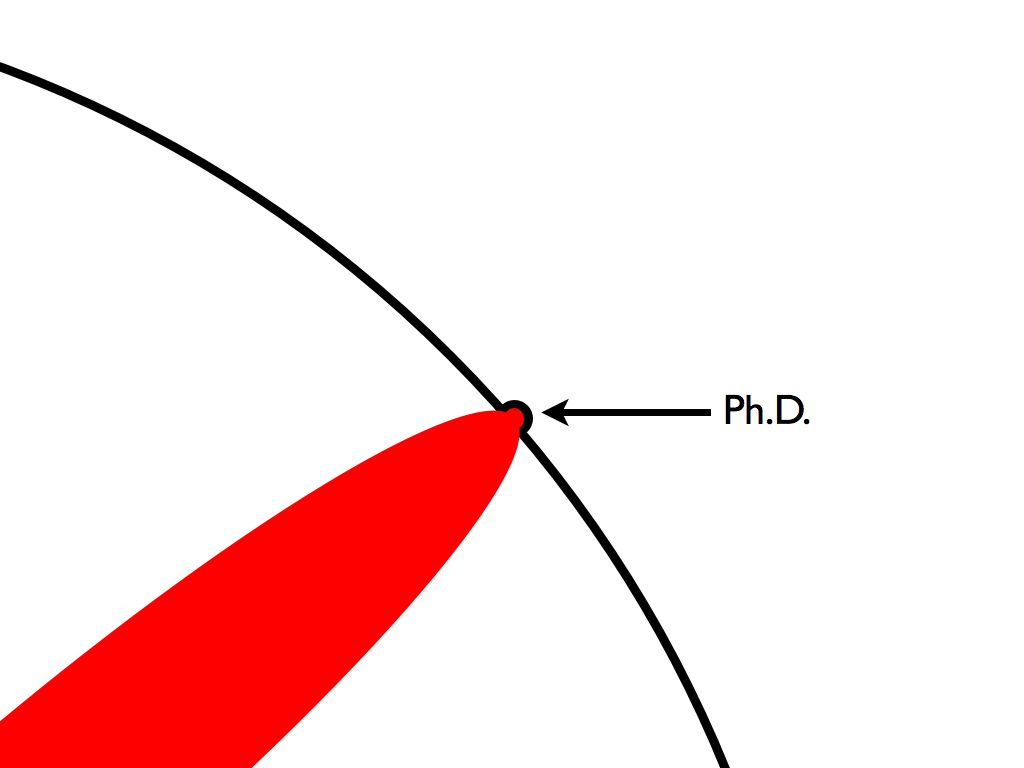

There is this totally false depiction of the PhD thesis, which is this:

which supposes that the individual researcher forges beyond the rest of knowledge on his or her own. But that's not how it works. We do all of our work entirely within the range of what might be called 'existing knowledge'. And when existing knowledge grows or changes, it does so on its own and not by virtue of one unique entity.

3. How do you think your work takes forward or develops the literature in this field?

Honestly, this question is just the same as the previous question, except that it refers to the localization of those propositions or findings.

The presumption in the question is that my new research builds on or extends previous research. It is a perspective or point of view that depicts knowledge as a mountain of propositions or facts, and it suggests that our PhD work is intended to extend that mountain (in an appropriately inferential or evidence-based way).

In my own case, if I had to characterize my contribution, it would be like this: same mountain, different view.

As I said in my answer to the previous question, there is nothing uniquely my own that has been added to human knowledge; I am working within the same world, the same linguistic framework, the same logic and mathematics, the same sets of properties and qualities, as everyone else.

My contribution, if we must identify one, is that I see it differently from everyone else (of course, everyone sees it differently from everyone else).

One thing that I think is important is that I think that social knowledge and human knowledge are relevantly similar. Specifically, they share the same structure and the same logic. In both humans and societies, the structure of a concept or idea is the same: it is the set of connections between entities.

This general structure - what I called 'Learning Networks' and George Siemens called (more successfully) connectivism - calls into question even the concept of 'literature in the field', because knowledge is not divided between some privileged set of writings, 'literature', and everything else.

At best, the 'literature' might be thought of as some stigmergic activity enabling each of us to contribute our own perspectives to a common object - a human intellectual anthill, if you will. But this thing that we build is distinct from the knowledge we have as a society, and does not hold any epistemic priority over other such network-based objects of knowledge, such as the 747 aircraft or World War II. It's a thing upon which each of us can reflect and obtain our own unique perspective on collective knowledge.

So I am frankly not interested in developing 'the literature', except to offer the occasional contribution as a social gift, much the way I might contribute to Wikipedia or add to a cowpath in the grass by walking upon it. I don't think the objective of research or scientific enquiry is to develop the literature, or at least, not only to develop the literature. It is to engage, to contribute a life to society.

If I were forced to say where I think the greatest advance lies, I would probably say that it is the application of this thinking to education and pedagogy (keeping in mind that if it weren't me, it would surely have been someone else).

Educational theory before connectivism is based almost completely on the idea that learning involves the recall of a set of propositions or facts, and is cumulative (much like knowledge in the literature) and stigmergic (analogous to a co-creation of knowledge). Dissuading ourselves of these propositions, and understanding that learning is network based, founded in the development of custom and habit, is the core of my work over the last ten years.

4. What are the 'bottom line' conclusions of your research? How innovative or valuable are they? What does your work tell us that we did not know before?

This is the same question again, but with a slightly different presupposition about the nature of enquiry. The presupposition actually takes two forms: first, as the 'bottom line' as a chain of inference or the conclusion of a logical argument; and second, as the 'bottom line' or net value (to society? to Bill Gates?) of the research.

Both presuppose a directionality to research. Both suppose that research works toward an outcome. And both, in their own way, focus on the utility or value of the research.

As we can no doubt infer from what I have discussed above, directionality is very much a matter of perspective. Sure, it is always possible to depict a progression or flow from one entity to the next to the next in a network. It is always possible to describe a series of activations, one after another, over a slice of time. But the idea that these lead anywhere is surely a matter of opinion.

So at best what this question is asking me to do is to imagine the perspective of some putative observer and to ask what my work looks like from their perspective (this is in fact the actual process I undertake when describing our research program in my current office).

For example, we could ask, "what is the value of knowing that learning is associative rather than propositional?" We can take the perspective of four distinct entities to draw out the implications:

- from the perspective of the individual student, it results in understanding that learning anything is based in a certain set of skills (which I call the 'critical literacies') formed around the idea that knowledge is not cumulative and constructed, but rather based in practice and reflection resulting in habitual recognition of relevant phenomena. In other words, it makes them better learners.

- from the perspective of the teacher, it results in the understanding that teaching does not consist in explaining or describing, because these depend on an already strongly developed association between the words and the concepts, but rather, that we can at best show (i.e., model and demonstrate) actual practice, and have them obtain direct experience and practice.

- from the perspective of the education technology provider, it results in the understanding that networking and interaction are essential components of learning, that new experiences must be based on past experience, which entails the development of personal and experiential learning environments.

- from the perspective of the employer seeking to address skills shortages it results in the shift from a formal class-based outcomes-based learning paradigm to an ongoing informal learning network, hands on support systems, and personal learning program.

These four might seem like large leaps from the answers given to the first and second questions, but they are not in fact so large - of course, depending on your starting point you might have to shift your thinking 180 degrees to get to this perspective, or it might click into place as immediately intuitive or rational.

5. Can you explain how you came to choose this topic for your doctorate? What was it that first interested you about it? How did the research focus change over time?

Mostly by accident, by opting for what I thought was obvious, and by seeing opportunity.

The accidents are the vagaries of experience. Early exposure to science fiction in the local library stirred my imagination and led me to want to be a scientist; poverty led me (on the advice of my father) to investigate computers and technology; being overlooked for promotion led me to enrol in university to become a scientist; a full English section in my physics program led me to enrol in philosophy; my first course in philosophy exposed me to David Hume.

Why is this important? Because of my background in computers, I knew that logic is arbitrary, so I was sympathetic with Hume's scepticism. This led me over time to a distrust of cognitivist methods, and hence a Bachelor's thesis defending Hume's associationism and a Master's thesis questioning model-based semantics as arbitrary and unsound.

In my PhD years I worked on the idea of knowledge as based on relevant similarity, developing a logic of association, while at the same time creating huge conceptual maps of ideas, disciplines and a wall-sized history of philosophy. So I was ready when Francisco Varela discussed the essentials of network theory in a lecture at the University of Alberta, and between reading Rumelhart and McClelland and attending the Connectivism conference in Vancouver, had come to see that connectionism and associationism amount to essentially the same theory.

I came into the field of learning technology via distance education at Athabasca University. Given my background, it should be no surprise that I tried several things, including the creation of a Bulletin Board Service (BBS) and co-authoring of an academic MUD. At Assiniboine I created a website and online courses and eventually a learning management system. As I gained experience I found that network principles could be applied to learning technology. I explored the use of content syndication, developed the idea of learning networks, and with George Siemens created the first MOOC. These were all instances of connectionism applied to pedagogy.

My core academic interest lies in understanding knowledge and cognition -- the processes of learning, inference and discovery. Time and experience have refined my early thoughts on the matter, but I have always approached the subject from an empirical and scientific perspective. There are no magic symbol systems, there is no privileged access to nature. There's only experience and a very human - indeed, a very animal - way to comprehend it.

6. Why have you defined the final topic in the way you did? What were some of the difficulties you encountered and how did they influence how the topic was framed? What main problems or issues did you have in deciding what was in-scope and out-of-scope?

If I had to state in a sentence how I define my topic, it is in the previous paragraph: understanding knowledge and cognition through understanding the processes of learning, inference and discovery.

In other words, I am focused first on how we learn rather than what we learn. This is an epistemic choice; a cognitivist or rationalist approach will first describe what we know - "we know language, we know mathematics, we know who we are," etc. My view is that many of these knowledge claims are incorrect. We do not know universal truths, we do not have knowledge of ideal abstracts. We don't, I argue, because we can't.

As a consequence, through most of my career I have found myself in conflict with those who have very specific theories about what we know, and (therefore) how we know it. They depict these knowledge claims as givens and construct and derive theories of learning and pedagogy based on this.

For example, a common line of argument runs as follows: we understand scientific principles, therefore we have knowledge of abstract universals, therefore these must be codified in a physical symbol system, so learning is a process of acquiring and codifying these statements. This creates a view of knowledge and learning that is content-based and focused on the assimilation of a set of these statements by the most efficient means possible.

This is the dominant view, and the position I advocate meets opposition at each stage of the inference.

It results in the need to reframe knowledge and learning from the ground up. It becomes very difficult to decide that something is "out of scope" because each statement of an educational theory varies in importance and meaning depending on which of these perspectives you take. Even the idea of what constitutes a theory - and whether connectivism is one - depends on your perspective.

Throughout the last fifteen years or so I have assembled thousands of small items, hundreds of blog posts, and various talks and longer works. These do not lead from a basis of evidence to a single conclusion. Rather they are point by point observations on a welter of interconnected points, none of which is pure data, none of which is pure theory, all of which constitute an interconnected perspective on knowledge and learning that undermines, and advances an alternative to, the cognitivist view.

7. What are the core methods used in this thesis? Why did you choose this approach? In an ideal world, are there different techniques or other forms of data and evidence that you'd have liked to use?

As someone who wrote a presentation entitled Against Digital Research Methodologies I have to say I didn't use any core methods per se. It's not that I think that science is random. It's rather that, in the words of Paul Feyerabend, scientific method is whatever works.

I've given the talk several times and have tried to express this in different ways. I've talked about my work as being a process of discovery, in which I try things, explore things, and look for patterns that stand out, unexpected significance or meaning, patterns of change, and even observations and inferences.

In other cases I've talked about conduct by research and design (note, by this I do not mean "design-based research", properly so called).

Maybe the best way to approach this question is to take on the question of data and evidence head on. Because the presupposition in a question like this is that the research methodology will either be inductive - that is, inference to a general principle or method based on evidence - or abductive - that is, inference to the best explanation of a body of data or evidence.

In my talks I argue that this depicts research as following a classical theory of science, one in which we express data as a set of "observation sentences" and derive from that a set of theoretical statements, which collectively form a model or representation of reality. Most people understand that in the end we cannot distinguish between observation statements and theoretical statements - this is why 'truth' is often defined as 'truth within a theory T'.

But they are less likely to agree with (or even understand) Quine's second proposition, that reductionism is a dogma. We don't in any way infer from evidence and data to generalization or method. Rather, the data show us only one of two things, either:

- that something exists, or

- that something is possible

Indeed, the bulk of my work takes the form: it can be done, because I've done it. My work, in other words, takes the form of modelling and demonstrating, of giving an example (nothing more) that others can use in their own thinking and their own reasoning.

It is often asked of me: if there are no universal principles or generalizations, then what are those statements that look like universal principles or generalizations? In response, I say that they are abstractions.

But then, continue my questioners, aren't abstractions themselves idealizations based on evidence? And my response is, no, abstractions (and therefore universals and generalizations) are not created by inference from a set of empirical data. They are created from subtractions from empirical data (sometimes even one piece of data).

There are many ways to create abstractions, but I'll illustrate just one: the elimination of extraneous data from two overlapping concepts. Consider the concepts of 'dog' and 'couch', which I described above. If we keep the connections between those entities where the concepts overlap, and discard the rest, we have a new abstraction, which is whatever it is that dogs and couches have in common.

Like this:

How should we characterize this abstraction? This is where the characterization gets difficult. It might be the idea that dogs like to sleep on couches. It might be that both dogs and couches are things. What it means depends on everything else around it. The more we subtract, the less the overlap, the greater the range of possible things it could be.
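Abstraction by subtraction can be sketched as a set intersection: keep the connections the concepts share and discard the rest. The connection sets are invented for illustration, and the third concept, `chair`, is a hypothetical addition used only to show what happens when more is subtracted.

```python
# Hypothetical activation patterns, each a set of connections (edges).
couch = {("n1", "n2"), ("n2", "n3"), ("n3", "n4"), ("n4", "n5")}
dog = {("n2", "n3"), ("n3", "n4"), ("n4", "n5"), ("n6", "n7")}
chair = {("n3", "n4"), ("n4", "n5"), ("n8", "n9")}


def abstract(*patterns):
    """Keep only the connections common to every pattern; discard the rest."""
    return set.intersection(*patterns)


dog_couch = abstract(couch, dog)
narrower = abstract(couch, dog, chair)

print(sorted(dog_couch))
print(sorted(narrower))
```

Nothing is inferred or added here; the result is always a subset of what was already present. Each further subtraction leaves fewer connections, and so less to constrain what the abstraction might be taken to mean.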

8. What are the main sources or kinds of evidence? Are they strong enough in terms of their quantity and quality to sustain the conclusions that you draw? Do the data or information you consider appropriately measure or relate to the theoretical concepts, or underlying social or physical phenomena, that you are interested in?

This question doubles down on the idea that a thesis is created by assembling a body of data or evidence that supports a conclusion. Hence the thesis is evaluated by two criteria:

- the quality of the evidence (which speaks to the soundness of the thesis)

- the inference from evidence to conclusion (which speaks to the validity of the thesis)

I've dealt with inference above. But what are the criteria for good evidence?

Virtually all research that still uses this model will be based on some sort of statistical generalization; the days when we could reason inductively from evidence to conclusion are long since past, a relic of the world when Newton's theories held sway. Perhaps it is true, as Einstein said, that God does not play dice with the universe - but if so, then he is a mean poker player.

Evidence is based on two criteria: quantity and type. By quantity we are asking whether we have enough evidence to draw a statistical inference. There are some fairly well-established principles of probability that are at least as reliable as any other form of inference that will tell us that, for example, we cannot infer from 20 specific instances to a population of 7 billion instances.
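To put a rough number on the quantity point, here is a sketch using the standard normal-approximation margin of error for a proportion. It assumes a simple random sample and a 95% confidence level; the function name is hypothetical, and the approximation is itself crude at a sample size as small as 20.

```python
import math


def margin_of_error(n, p=0.5, z=1.96):
    """Approximate 95% margin of error for a proportion from a simple random sample."""
    return z * math.sqrt(p * (1 - p) / n)


small = margin_of_error(20)    # roughly 0.22, i.e. plus or minus 22 points
large = margin_of_error(1000)  # roughly 0.03

print(f"n=20:   +/- {small:.3f}")
print(f"n=1000: +/- {large:.3f}")
```

Under these assumptions the precision depends on the size of the sample, not on the size of the population: 20 instances leave an uncertainty of about 22 percentage points either way, whether the population is 7 thousand or 7 billion.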

But more significant is the question regarding the type of evidence, which is specifically focused around the question of whether the evidence is representative of the population as a whole. That's why you don't just ask your friends how they'll vote when you're predicting an election; chances are, your friends will vote like you do. It should also be why a class of 50 midwestern undergraduate psychology students should not be used as the basis for drawing conclusions about anything, but journals keep publishing the studies.

In my case, none of these matter, because I'm not generalizing (or, at least, I'm trying very very hard not to generalize).

In my case, the question is always: is this example an instance of the thing I'm talking about? If it is, we can say that it exists, and move on, trying to find associated phenomena. If it is not, then what was it that misled me about it? Either way, I learn.

In a world without universal (or even predefined, or even commonly understood) categories, answering questions like this is not a trivial matter. In some cases, it is sufficient to obtain agreement among a group of people that "this x is a y". In other cases, we have to take our time, be pedantic, and show that "this x is precisely a y". Most of the work involved unravelling confused, imprecise or inconsistent categorization. People assume that because we use the same words we mean the same things, while experience suggests that this is often not the case.

In my case, we consider the set of posts, papers and talks, etc., to be both the evidence and the conclusion (this is an instance of what I mean when I talk of 'direct perception'). There is no sense to dividing one set of my posts as the evidence and another as the conclusion. The same happens in the mind: the set of neural activations is at once the evidence and the conclusion. There is no thing over and above the set of neural activations that constitutes the 'thesis' being discovered or proven (or, to be more accurate, if there is, it exists only as an emergent phenomenon, and can only be recognized externally by a third party or observer).

So when we ask what the sources of the evidence are, and whether they sustain the conclusion, we are asking what is, in my mind, an incomprehensible question, or more accurately, one that embodies incorrect presuppositions about the nature of knowledge. Similarly, if one asks about the correctness of the evidence and the conclusion, we reach the same conclusion, that the question presupposes an incorrect epistemology.

I have developed and often talked about my response to this, which is what I call 'the semantic principle', and what is at best a set of methodological presuppositions based on some opinions about the most effective function of a network. So I ask whether the entities and connections in my thesis are diverse, autonomous, open and interactive. There's a longer discussion to be had here (what does it mean to say a concept is autonomous, for example? what does it mean to say that the parts of a thesis are diverse?). But it gets, I think, to a deeper understanding of epistemic adequacy than a query about the soundness and validity of an argument.

9. How do your findings fit with or contradict the rest of the literature in this field? How do you explain the differences of findings, or estimation, or interpretation between your work and that of other authors?

I've discussed a lot of this in my discussion above. But I have as yet remained silent on the difference between my own approach and the major approach in learning theory, or perhaps I should say 'set' of approaches, under the heading of 'constructivism'.

If I had to put the matter in a phrase, I'd say that constructivism is cognitivist and connectivism is non-cognitivist. Of course some people will immediately respond that there are some varieties of constructivism that are non-cognitivist. I typically respond, only half in jest, that for any criticism of constructivism C, there is a version of constructivism not-C, for an indefinitely large set of criticisms {C1...Cn}.

In fact, though, theory-building, model-building and representation all had their foundation in philosophy and the sciences well before their appearance in education, and the emergence of constructivism in education is a not-surprising response to (for example) behaviourism and instructivism, just as they were responses to logical positivism in the sciences. Bas van Fraassen, for example, offers a prototypical account of 'constructive empiricism' in the sciences. Other flavours of constructivism are found in things like Larry Laudan's 'Progress and its Problems'. Or Daniel Dennett's 'The Intentional Stance'.

They are at once responses to scientific realism, grounding science in logical and social structures (there's a strong strain of this as well in Kuhn), and at the same time are responses to the discredited idea of 'the observation statement', which was science's only response to the idealist and the rationalist. Scientific constructivism was a way to preserve rationality in science, without surrendering its basis in empirical grounds. Only these grounds would be served by proxy, through an active engagement with experience, a process of construction, the formulation of what Quine called 'analytic hypotheses', the presentation of tentative conclusions, models and representations which would be evaluated as a whole against experience, against reality.

It's a brilliant response, and I have no real quarrel with the overall approach. My major criticism is that while they jettisoned the 'positivism' of logical positivism, they kept the 'logical' part, and it was in the logical part that logical positivism actually foundered. Indeed, Quine should have called his paper Two Dogmas of Logicism, for that's what they were. Specifically:

- The analytic-synthetic distinction fails not because there are no observations, but because there are no observation statements

- The principle of reduction fails not because there's no empirical basis in fact, but because there are no logical principles of reduction

And this is where I find my difference with the constructivists. In (almost) all cases, they depict the creation of knowledge as one of construction, where we (intentionally) create models or representations, grounding them in a model or environment (literally: "making meaning"). Often this is depicted as a social activity (sometimes, it is depicted as only a social activity). As a stigmergic activity, as I discussed above, I can comprehend it. But not as a theory of learning.

And the reason it is not a theory of learning is simply this: in a person (in a network, etc) there is no third party to do the constructing.

The creation of a model or representation (or network or theory or whatever) that will be tested against experience (or reality, or a run of computer simulations, or whatever) is a representationalist theory which assumes a distinction between the model and whatever would act as evidence for that model (and, often, a set of methodologies and principles, such as 'logic' and 'language', for constructing that model).

But the network theory I've described, if taken seriously, entails the following:

- the network is self-organizing; we do not 'create' sets of connections, these result naturally from input and from the characteristics of the entities and connections

- the network does not 'represent' some external reality; 'evidence' and 'conclusion' are one and the same; the network itself is both the perceptual device and the inferring device

- learning isn't about creating, it's about becoming

Someone recently said to me, "well that makes you a radical constructivist". Perhaps. But now the meaning of 'constructivist' has ceased to be anything that would be recognized by most constructivists. This is an outcome that could be predicted by my own theory, but not by most others.

10. What are the main implications or lessons of your research for the future development of work in this specific sub-field? Are there any wider implications for other parts of the discipline? Do you have 'next step' or follow-on research projects in mind?

I have several things in mind, though life may be too short for all of them:

- I want to continue to develop in technology an instantiation of the concepts I describe in theory (understanding that there's no theory, etc., etc.). This is the basis for my work in MOOCs and my current work in personal learning environments, as instantiated in the LPSS Program. I want to see the various ways in which a learning network can grow and develop and help real people address real needs and make their lives better.

- I want to draw together the various threads I've described in this post and offer a single coherent statement of connectivism as I see it. Related to this, but probably a separate work, I want to draw out and make clear the elements of 'critical literacy', which I believe constitute the foundations (if you will) of a new post-cognitivist theory of knowledge (the program above has funding but I have no funding for this work, so as a message to society at large, if you ever want to see this, consider some means of funding it).

- I would like to see the principles of self-organizing knowledge applied to wider domains and to society at large, as (shall we say) a new understanding of democracy, one based not on power and control and collaboration and conformity, but one based on autonomy and diversity and cooperation and emergence. Society itself will have to do this; I can but point the way.

That's pretty much it, from an academic and professional perspective. But I also understand that the work is not possible in the confines of my own office working with texts and software. None of what I do today, nor indeed, have ever done, has been separate from the rest of my life and living. Each experience that I have, each society that I see, each new city and each new bike ride adds a nuance and a subtlety to my understanding of the world. It is a beautiful life and my greatest contribution to the future would be, I think, to continue living it.

That's my PhD oral exam. I'd like to say thank you on behalf of the group and ourselves and I hope we've passed the audition.