In this article, I will show you the deep reason why modern AI cannot behave like living beings. I will also discuss how to fix it. For the first time, you will learn about a new type of mathematical neuron from which you can build a new generation of AI that will be able to behave the way biological living organisms behave. Simply put, I will tell you what living AI will be created from.

The main feature of modern neural networks

A modern neural network is a mathematical model that copies the structure of a biological neural network. The main feature of these models is that they do not need to accurately recreate the physics of the biological process: the action of neurotransmitters, ion channels and transmembrane proteins. The task of a modern neural network is to copy the functionality of a living neural network: transmission and basic signal processing.

How modern neural networks appeared

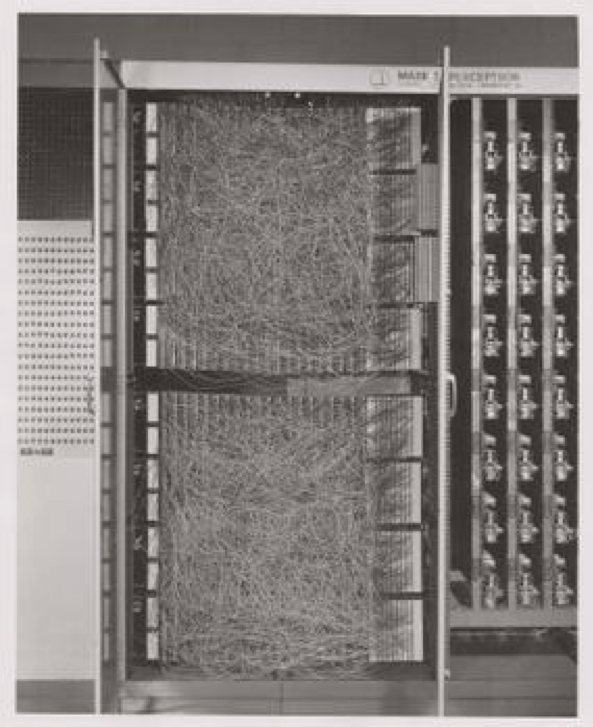

By simplifying the model of a real neuron to a dendrite, a neuron body and an axon, Warren McCulloch and Walter Pitts created the concept of a mathematical neuron in 1943. In 1958, Frank Rosenblatt built a computer program based on the McCulloch-Pitts concept, and a little later a physical device: the perceptron, from which the history of artificial neural networks began.

Please note that the mathematical model was based on knowledge of the structure of biological neural networks from the early twentieth century.

It is for this reason that the McCulloch-Pitts mathematical neuron has four main elements.

1- An input vector of parameters, which is a series of numbers arriving at the input of the neuron, and a vector of weights directly associated with it (programmers like to call this vector a weight matrix). It is the weights that change during the learning process and allow mathematical neurons to respond correctly to incoming signals. According to the idea of the creators, the vector of weights should simulate the effect of synaptic plasticity, which implements the learning process of the living nervous system.

2- An adder is a block of a mathematical model of a neuron that adds the input parameters multiplied by their weights.

3- Neuron activation function – determines the parameters of the output signal from the value received from the adder.

4- Subsequent neurons, the inputs of which receive a signal with the output value of this neuron. It is worth saying that if the described mathematical neuron is the last, then this element may be missing.
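To make the four elements concrete, here is a minimal sketch of such a neuron in Python. The function names, the `bias` term and the AND-gate weights are illustrative assumptions and do not come from the original McCulloch-Pitts paper; the sketch only shows how inputs, weights, the adder and the activation function fit together.

```python
def step(total, threshold=0.0):
    """Activation function: output 1 if the summed input reaches the threshold."""
    return 1 if total >= threshold else 0


def neuron(inputs, weights, bias=0.0, activation=step):
    """One mathematical neuron: weighted sum (adder) followed by an activation."""
    # Adder: multiply each input by its weight and sum the results.
    # The bias is an assumed extra term that shifts the firing threshold.
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    # The activation function turns the sum into the output signal,
    # which would then be passed to the inputs of subsequent neurons.
    return activation(total)


# Hand-picked weights that make the neuron behave like a logical AND.
and_weights = [1.0, 1.0]
and_bias = -1.5

outputs = [neuron([a, b], and_weights, and_bias) for a in (0, 1) for b in (0, 1)]
print(outputs)  # [0, 0, 0, 1]
```

Changing only the weights and the bias changes which inputs make the neuron fire, and that is the only thing learning adjusts in this model.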

It is from mathematical neurons with this design that artificial neural networks are built, which in turn consist of several neural layers.

1- The receptor layer is a set of parameters. Although it is depicted in many diagrams as a neural layer, it is not actually made up of mathematical neurons. In reality, this is simply digital information captured by receptors from the surrounding world.

2- An associative or hidden layer is an intermediate structure, always consisting of full-fledged mathematical neurons, capable of remembering parameters and finding correlations and nonlinear dependencies. It is this layer (or many layers in more complex models) that can create mathematical abstractions and generalizations. In modern models, this is almost always a set of layers, each of which filters out a new feature vector for the next layer. It is layers of this type that are the most important part of any neural network, creating highly differentiated abstractions during the learning process.

3- The output layer is a set of mathematical neurons, each of which is responsible for a specific class, or for the probability that an object belongs to one of the initially defined subtypes. This layer usually contains as many neurons as there are subtypes represented in the training set.
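The three layers above can be sketched as a single forward pass. All numbers, the ReLU activation in the hidden layer and the softmax at the output are assumptions chosen for illustration; real networks differ in size and in the choice of functions.

```python
import math


def dense(inputs, weights, biases, activation):
    """One layer of neurons: each row of weights belongs to one neuron."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]


def relu(x):
    return max(0.0, x)


def identity(x):
    return x


def softmax(scores):
    """Turn raw output scores into probabilities that sum to 1."""
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Receptor layer: plain numbers, not neurons.
receptor_layer = [0.5, -1.0, 2.0]

# Hidden (associative) layer: two neurons with three weights each.
hidden_weights = [[0.2, -0.4, 0.1], [0.7, 0.3, -0.5]]
hidden_biases = [0.0, 0.1]

# Output layer: one neuron per class (two classes here).
output_weights = [[1.0, -1.0], [-1.0, 1.0]]
output_biases = [0.0, 0.0]

hidden = dense(receptor_layer, hidden_weights, hidden_biases, relu)
scores = dense(hidden, output_weights, output_biases, identity)
probabilities = softmax(scores)
print(probabilities)
```

The output layer has one neuron per class, so the length of `probabilities` equals the number of subtypes the network can distinguish.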

All the magic of modern AI is concentrated in the presence of hidden associative layers. This is the mechanism through which an artificial neural network can build hypotheses based on finding complex dependencies in the source information.

If we briefly summarize all the above, we will see that training a modern neural network comes down to the optimal selection of weight matrix coefficients in order to minimize the probability of error.
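As a rough illustration of what "selecting the weights" means, the sketch below trains a single neuron on the logical OR function using the classic perceptron learning rule. The learning rate, the zero starting weights and the choice of OR as training data are assumptions made for the example; modern networks use gradient-based methods on far larger weight matrices.

```python
def predict(weights, bias, inputs):
    """Step-activated neuron: fires when the weighted sum is positive."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train(samples, learning_rate=1.0, max_epochs=100):
    """Adjust the weights until every training sample is classified correctly."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            if error != 0:
                mistakes += 1
                # Nudge each weight in the direction that reduces the error.
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, inputs)]
                bias += learning_rate * error
        if mistakes == 0:
            break  # no errors left on the training set
    return weights, bias


# Training set for logical OR: (inputs, expected output).
samples = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

weights, bias = train(samples)
predictions = [predict(weights, bias, inputs) for inputs, _ in samples]
print(predictions)  # [0, 1, 1, 1]
```

Nothing in the neuron changes except the numbers in `weights` and `bias`, which is exactly the limitation discussed in the rest of the article.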

Some programmers call the result of the neural network a hypothesis since this result shows the dependence on the parameters of the incoming information. The irony is that this dependence is the main obstacle preventing us from creating living AI.

Why?

The reason is very simple. At the beginning of the last century, science saw the neuron as a biological conductor with a resting potential of about -70 millivolts and an action potential of 50 millivolts. The networks of these conductors formed the fields of brain activity, and it was in their configuration, as it seemed to us then, that the essence of the thinking process as a way of processing information was hidden.

At the end of the last century, assumptions appeared that all this was not true. In 2001, in the journal Experimental and Clinical Physiology and Biochemistry, I wrote a special article on this topic, in which I suggested that the basis for the plasticity of living neural networks is not the structure of the network as a set of synapses and neurons, but a special class of transmembrane proteins that form ion channels on the surface of each individual neuron.

Now this is no longer just a hypothesis, but a well-established scientific fact, which has recently received visual confirmation. Researchers from the Netherlands Institute of Neuroscience published a video of nerve plasticity in a moving axon.

Today we can say with confidence that all action potentials in the brain begin in one specific part of the neuron – the axon initial segment (AIS), which acts as a control center. The length of this segment can become shorter with high activity or longer with low activity, and the main players in this process are special transmembrane proteins that form ion channels. Moreover, the density and quantity of these proteins in this segment of the neuron can change very quickly (over several minutes).

What does this mean for AI?

New data suggests that neurons are not just signal conductors, but carriers of their own individuality, capable of changing their own attitude to the incoming signal in real time. This reveals to us the deep basis of our personal individuality – it shows how a matrix of preferences is formed from billions of individually special neurons.

The personality of each of us is millions of predetermined choices (some like red and don’t like black, like sweet and don’t like salty, and some vice versa). Each neuron in our brain is an individual player in a team that builds the deformation matrix of our personality.

The neural matrix allows us to act not in accordance with the program (algorithm), but in accordance with our attitude to sensory information (configuration of the preference matrix). Therefore, we can make mistakes and learn by acting in a completely unfamiliar situation.

The future of AI, or how a living neural network will be created

Living AI will have a new mathematical neuron with a variable dynamic position function. This is an additional equation that determines the parameters of biological discreteness. This equation will simulate the functions of the axon initial segment (AIS) using the three-dimensional coordinate index of the current position of the neuron in the neural layer matrix. Thus, each of the hidden associative layers of the neural network will become a component of a three-dimensional preference matrix.

This will allow us to create a completely new structure – an individual neural matrix that will behave like a living structure. It will create and change its own attitude to sensory information and act (respond to sensory impulses) not only in accordance with the vector of weights, but also in accordance with the position of the mathematical neuron in the preference matrix and the local configuration of each associative neural layer.

The new type of AI will make mistakes and learn, gradually forming its own relationship to sensory information. AI will be able to create its own character – its own attitude to current events.

The AI will gradually build its own preference matrix by changing the position of mathematical neurons in the associative layers of the neural network. In this way, we will be able to create neural networks of a new matrix type capable of behaving and acting like living organisms.
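The article does not give the equation for the position function, so the following is only a toy sketch of the general idea, and every detail in it is an assumption: the class name `PositionalNeuron`, the use of continuous coordinates instead of an index, the gain formula and the adaptation rule are all hypothetical. It shows a neuron whose response depends both on its weights and on its position, and whose position drifts with its own activity, loosely in the spirit of the AIS becoming shorter under high activity.

```python
import math


class PositionalNeuron:
    """Hypothetical neuron whose response depends on weights and on a 3D position."""

    def __init__(self, weights, position):
        self.weights = list(weights)
        self.position = list(position)  # assumed (x, y, z) coordinates in the matrix

    def gain(self):
        # Assumed position function: sensitivity falls with distance from the origin.
        distance = math.sqrt(sum(c * c for c in self.position))
        return 1.0 / (1.0 + distance)

    def respond(self, inputs):
        # Usual weighted sum, scaled by the position-dependent gain.
        total = sum(x * w for x, w in zip(inputs, self.weights))
        return math.tanh(self.gain() * total)

    def adapt(self, activity, target=0.5, rate=0.1):
        # Assumed AIS-like rule: activity above the target moves the neuron
        # outward (lower gain), activity below it moves the neuron inward.
        shift = rate * (abs(activity) - target)
        self.position = [max(0.0, c + shift) for c in self.position]


neuron = PositionalNeuron(weights=[1.0, 1.0], position=(1.0, 1.0, 1.0))
signal = [1.0, 1.0]

before = neuron.respond(signal)
for _ in range(20):
    neuron.adapt(neuron.respond(signal))
after = neuron.respond(signal)

print(before, after)
```

In this sketch the weights never change, yet the response to the same signal does, because the neuron has moved. That is the only point the example is meant to illustrate; it is not a specification of the matrix neuron described above.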

In addition, the neural matrix will become an important element in the architecture of personal artificial intelligence: a new type of AI built around a living brain and capable, through a neurocomputer interface, of completely repeating (mimicking) the characteristics of a specific human personality.

AI based on matrix neural networks will become a digital form of life, turning from an object into an active participant in the reality around us.