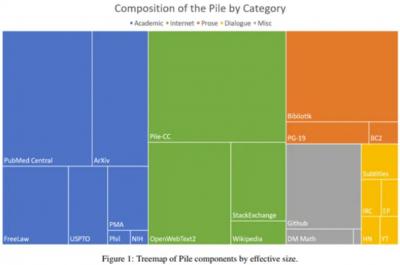

One thing I love about Mastodon is that I get to sit in on conversations like this one between Clint Lalonde and Alan Levine on open data sets used to train large language models. It's prompted by Lalonde asking whether there are other open data sets like Common Corpus (not to be confused with Common Crawl). This leads to an article about The Pile, an 885GB collection of documents aggregating 22 datasets including Wikipedia, arXiv, and more. There's Semantic Scholar, which appears to be based on scientific literature, but also includes a vague reference to 'web data'. There's also the Open Language Model (OLMo).
