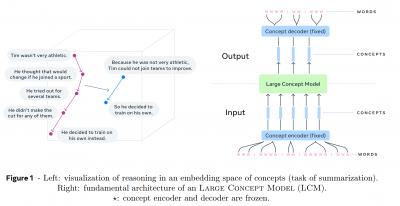

In philosophy there is something called the type-token distinction. A token is (say) the actual instance or use of a word, while the type is the abstract concept or idea it represents. This Meta paper offers a version of the distinction, saying that large language models are based on tokens, while human reasoning is based on concepts, i.e., types. I think there are issues with this, but it doesn't matter, because what they're actually doing is something different again: "The human brain does not operate at the word level only. We usually have a top-down process to solve a complex task or compose a long document: we first plan at a higher level the overall structure, and then step-by-step, add details at lower levels of abstraction." Breaking things down into parts, though a very useful heuristic (known as the Cartesian method), has nothing to do with types and tokens. But that's OK, because that's not what they're doing either. Looking more closely at the paper (a 49-page PDF), it appears that they're setting up basic representations like frames or models and looking at a word's role within them. But that's not how humans think at all.
