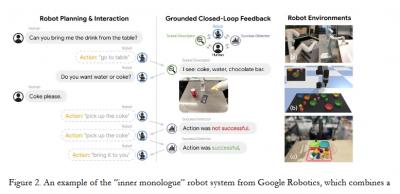

In a sense, neural networks have always been able to 'talk to themselves'. There is backpropagation, for example, where error signals flow back through the layers of a neural network, adjusting weights as they go. Or there are recurrent neural networks, where the output of neurons is saved and fed back in as input, in effect creating cognitive loops. But 'talking to ourselves', or the idea of an 'inner voice', has always been thought of as something more abstract, definable only in terms of lexicons and syntax, like a formal system. This article (34-page PDF) grapples with the idea from conceptual, theoretical and then practical perspectives, taking us from Smolensky's argument against Fodor and Pylyshyn through to things like the 'Inner Monologue Agent' from Google Robotics and Colas's language-enhanced 'autotelic agent architecture'. "Instead of viewing LLMs like ChatGPTs as general intelligences themselves, we should perhaps view them as crucial components of general intelligences, with the LLMs playing roles attributed to inner speech in traditional accounts in philosophy and psychology."

Today: 0 Total: 790 [] [Share]